Video generation has advanced rapidly in recent years and has attracted wide attention from researchers. To apply this technology to downstream applications under resource-constrained conditions, researchers often fine-tune pre-trained models with parameter-efficient tuning methods such as Adapter or LoRA. Although these methods can transfer source-domain knowledge to the target domain, the small number of trainable parameters limits fitting ability, and the source-domain knowledge may cause the inference process to deviate from the target domain.

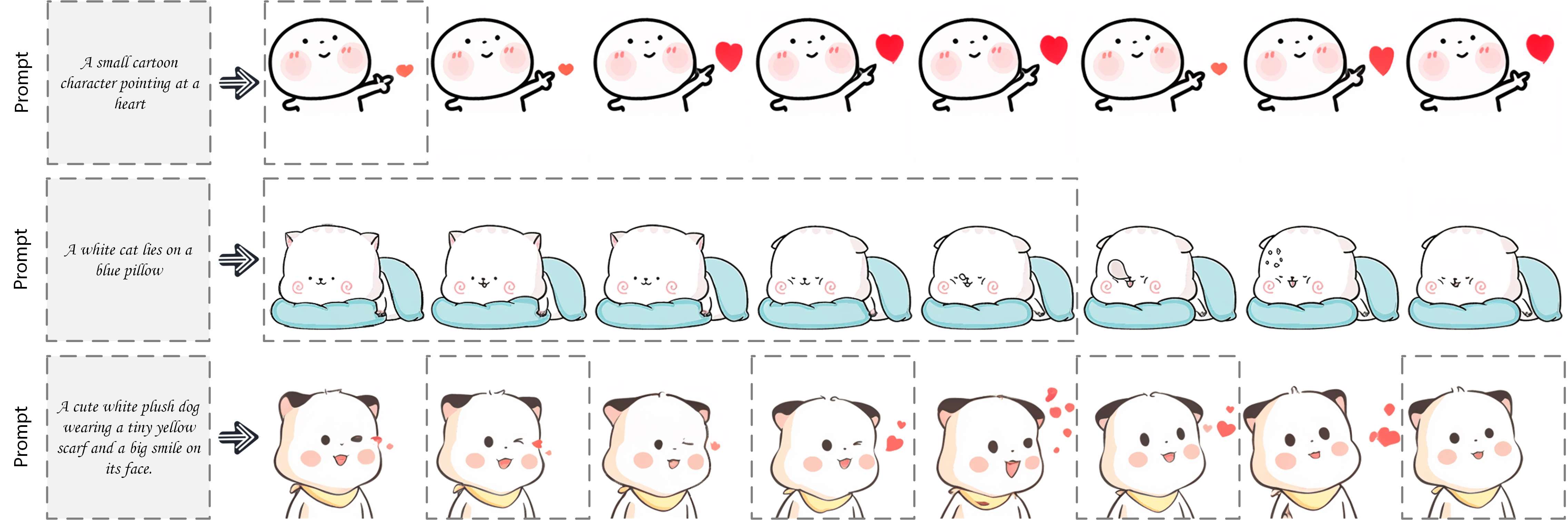

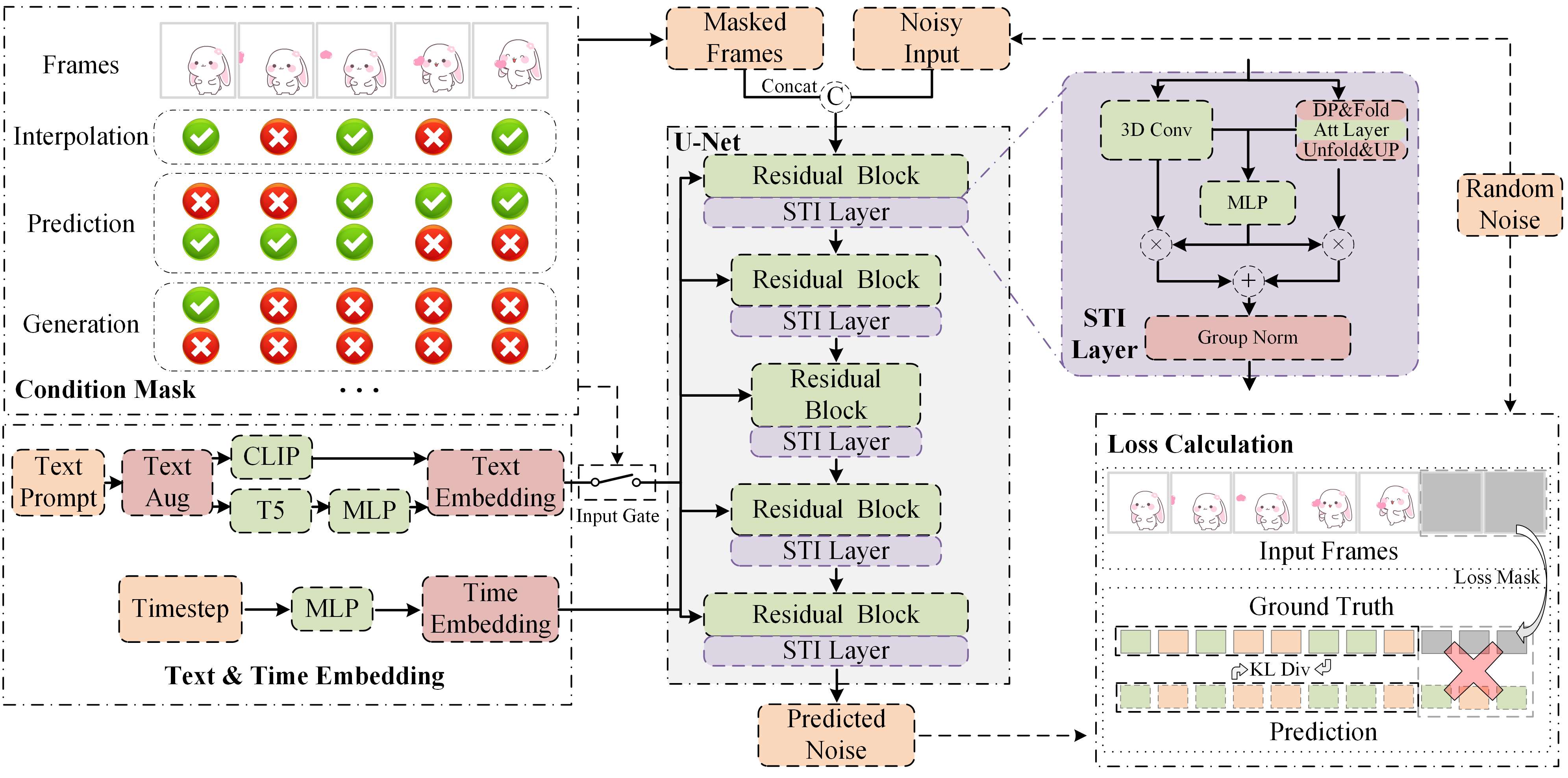

In this paper, we argue that under limited computing resources, training a smaller video generation model from scratch using only million-level samples can outperform parameter-efficient tuning on larger models in downstream applications: the core lies in the effective utilization of data and in the curriculum strategy. Taking animated sticker generation (ASG) as an example, we construct a discrete frame generation network for stickers with low frame rates, keeping its parameter count within what can be trained under limited resources. To provide data support for models trained from scratch, we propose a dual-mask based data utilization strategy, which improves the availability and expands the diversity of limited data. To facilitate convergence in the dual-mask setting, we propose a difficulty-adaptive curriculum learning method, which decomposes the sample entropy into static and adaptive components so that samples are drawn from easy to difficult. The proposed resource-efficient dual-mask training framework is quantitatively and qualitatively superior to parameter-efficient tuning methods such as I2V-Adapter and SimDA on the ASG task, verifying the feasibility of our method on downstream tasks under limited resources.
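The easy-to-difficult sampling idea can be illustrated with a minimal sketch. It is not the method from the paper: the abstract does not give the entropy formula, so the weighted sum in `difficulty`, the linear pacing in `curriculum_pool`, and all names and default values here are assumptions chosen only for illustration.

```python
def difficulty(static_score, adaptive_score, weight=0.5):
    """Hypothetical combined difficulty of one sample.

    static_score: a fixed score computed once before training (assumption).
    adaptive_score: a score refreshed during training, e.g. a recent loss
    value (assumption). The weighted sum is an illustrative choice only.
    """
    return (1.0 - weight) * static_score + weight * adaptive_score


def curriculum_pool(static_scores, adaptive_scores, progress, min_fraction=0.2):
    """Return indices of the samples currently eligible, easiest first.

    progress is the training progress in [0, 1]. The eligible fraction grows
    linearly from min_fraction to 1.0 (a hypothetical pacing schedule).
    """
    if not 0.0 <= progress <= 1.0:
        raise ValueError("progress must lie in [0, 1]")
    if len(static_scores) != len(adaptive_scores):
        raise ValueError("score lists must have the same length")

    n = len(static_scores)
    scores = [difficulty(s, a) for s, a in zip(static_scores, adaptive_scores)]
    # Sort sample indices from lowest to highest combined difficulty.
    order = sorted(range(n), key=lambda i: scores[i])
    fraction = min_fraction + (1.0 - min_fraction) * progress
    k = max(1, round(fraction * n))
    return order[:k]


if __name__ == "__main__":
    static = [0.1, 0.9, 0.5, 0.3]
    adaptive = [0.2, 0.8, 0.4, 0.7]
    for progress in (0.0, 0.5, 1.0):
        print(progress, curriculum_pool(static, adaptive, progress))
```

Early in training only the easiest samples are eligible, and the pool widens until it covers the whole dataset. Because the adaptive scores can be refreshed between calls, the ordering can change as the model learns.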


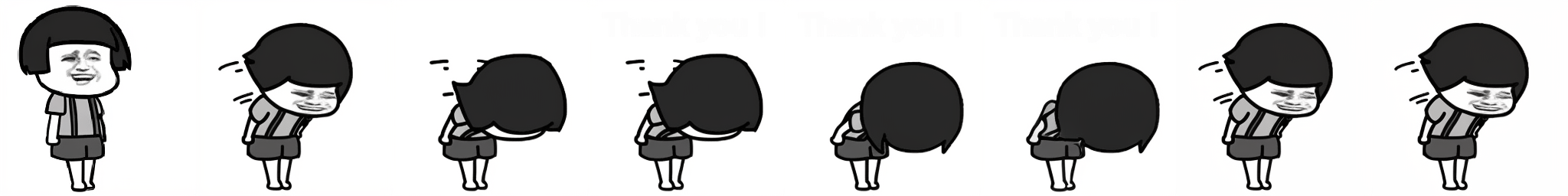


| Input Text: | A small cartoon character pointing at a heart |


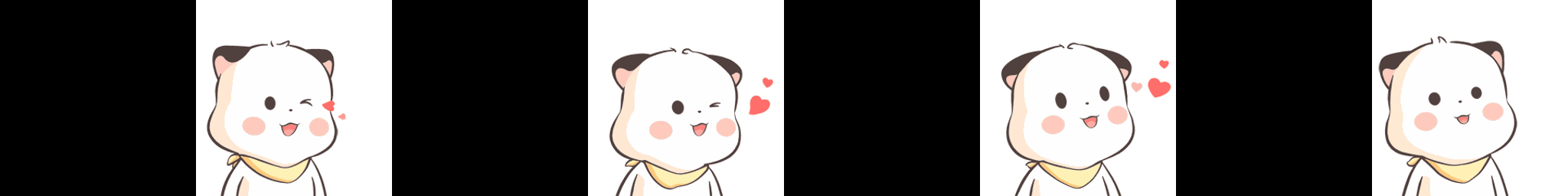

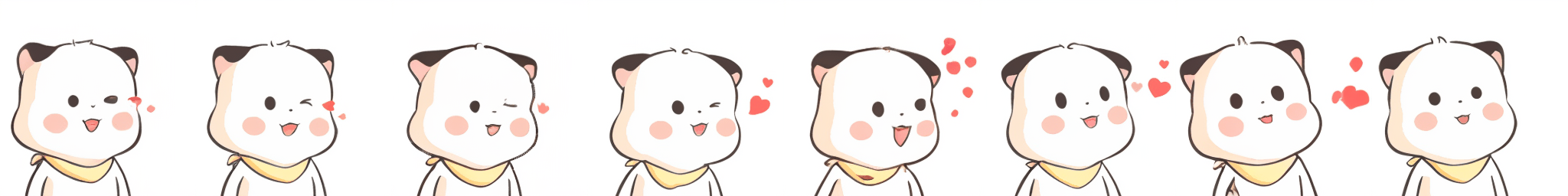

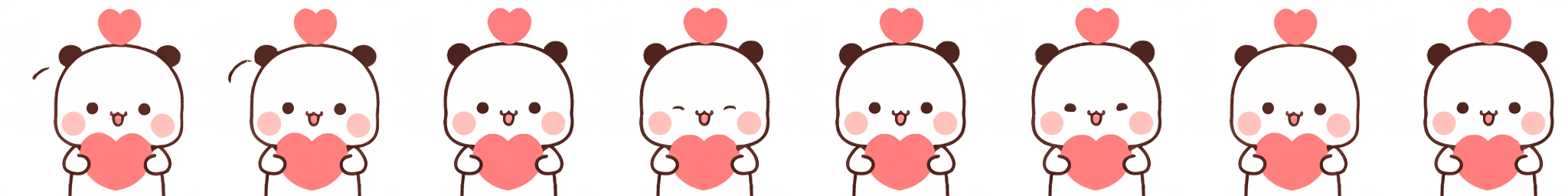

| Input Text: | A cute white cartoon teddy bear holding a heart in his hands |


| Ref Image | Ref Text | Customize-A-Video [1] | I2V-Adapter [2] | SimDA [3] | Ours |
| --- | --- | --- | --- | --- | --- |
|  | A cute little girl in a pink bunny hat showing surprise and joy |  |  |  |  |
|  | A small cartoon character pointing at a heart |  |  |  |  |
|  | A yellow smiley face with two thumbs up, symbolizing approval, satisfaction or enthusiasm |  |  |  |  |

If you use our work in your research, please cite:

@misc{anonymous2025RDTF,
  title={Resource-Efficient Dual-Mask Training Framework for Animated Sticker Generation},
  author={Anonymous},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}